AI Conversations Made Easy: Building Voice Recognition with OpenAI, Gradio, and ElevenLabs

Voice recognition technology is transforming the way we interact with our devices and applications. As an experienced software engineer, I’m thrilled to share my insights on creating a voice recognition tool using OpenAI, Gradio, and ElevenLabs. This article will guide you through the entire process, highlighting best practices and providing code snippets for each step. By the end, you’ll understand why each step is needed and have a strong foundation to build your own voice recognition tool.

Setting up the environment

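To begin, we need to set up our development environment. Make sure you have Python 3.8 or later installed. Next, install the necessary libraries using pip. The code in this article uses the pre-1.0 openai interface (openai.Audio, openai.ChatCompletion) and the Gradio 3.x gr.inputs / gr.outputs API, so the versions are pinned accordingly; the ElevenLabs calls are made over plain HTTP with requests:

pip install "openai<1.0" "gradio<4.0" requests

Obtaining API keys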

To use OpenAI and ElevenLabs, you’ll need to obtain API keys for both services. Sign up for an account on their respective websites and note your API keys.

- OpenAI: https://beta.openai.com/signup/

- ElevenLabs: https://www.elevenlabs.ai/sign-up
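Hard-coded keys are easy to leak through version control. As an alternative, here is a minimal sketch that reads them from environment variables instead. The helper name load_key is hypothetical, and the variable names OPENAI_API_KEY and ELEVENLABS_API_KEY are assumptions you should match to your own shell setup:

```python
import os


def load_key(name):
    """Return the API key stored in the environment variable `name`.

    Raises RuntimeError with a readable message if the variable is
    missing or empty, so a forgotten export fails early.
    """
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


if __name__ == "__main__":
    # Variable names are assumptions; adjust them to your environment.
    for key_name in ("OPENAI_API_KEY", "ELEVENLABS_API_KEY"):
        try:
            load_key(key_name)
            print(f"{key_name}: found")
        except RuntimeError as err:
            print(err)
```

With this in place, a plain assignment such as the ones that follow could become OPENAI_API_KEY = load_key("OPENAI_API_KEY").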

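Store both keys in a file named config.py next to your script; the main script later in this article reads them with import config:

OPENAI_API_KEY = "your_openai_api_key"
ELEVENLABS_API_KEY = "your_elevenlabs_api_key"

Converting the response to speech: integrating ElevenLabs for vocal responses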

With the response generated, we’ll now convert it to speech using ElevenLabs’ API. This function will take the generated text and return an audio file.
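An HTTP text-to-speech call typically hands back raw audio bytes rather than a file on disk, so one more step is needed before the audio can be played or passed to a UI. Here is a minimal sketch of that step. The helper name save_audio and the .mp3 suffix are assumptions for illustration; the suffix should match whatever format the service actually returns:

```python
import tempfile
from pathlib import Path


def save_audio(audio_bytes, suffix=".mp3"):
    """Write raw audio bytes to a new temporary file and return its path."""
    if not audio_bytes:
        raise ValueError("no audio data to save")
    # delete=False keeps the file on disk after the handle is closed
    with tempfile.NamedTemporaryFile(suffix=suffix, delete=False) as handle:
        handle.write(audio_bytes)
    return Path(handle.name)
```

The returned path can be handed to any player or UI component that accepts a file path. The caller is responsible for deleting the file afterwards.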

If an earlier version of your script spoke the reply with the macOS say command, replace the subprocess.call(["say", system_message['content']]) line with a call to the following function:

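import requests

ELEVENLABS_API_KEY = "your_elevenlabs_api_key"
# Placeholder: replace with a voice ID from your ElevenLabs account
VOICE_ID = "your_voice_id"

def text_to_speech(text):
    # ElevenLabs expects the key in an xi-api-key header and the voice ID in the URL
    headers = {
        "xi-api-key": ELEVENLABS_API_KEY,
        "Content-Type": "application/json",
    }
    data = {"text": text}
    url = f"https://api.elevenlabs.io/v1/text-to-speech/{VOICE_ID}"
    response = requests.post(url, json=data, headers=headers)
    response.raise_for_status()  # surface a bad key or voice ID immediately
    return response.content  # raw audio bytes (MP3 by default)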

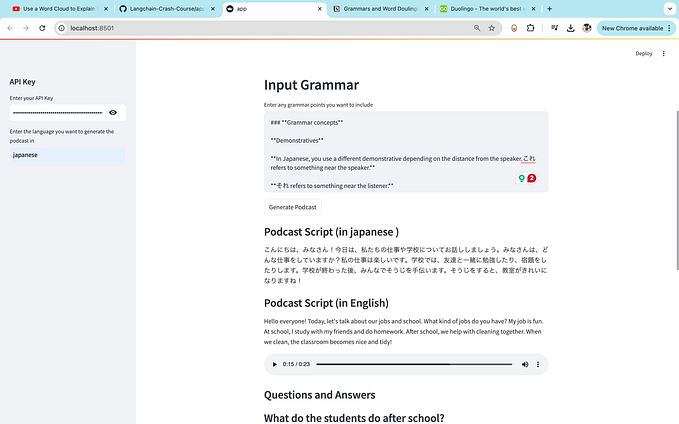

audio_response = text_to_speech(system_message['content'])

Creating a Gradio interface

With our core functions in place, let’s create a Gradio interface that connects everything together. We’ll create a function called process_voice_input that takes an audio input, transcribes it, generates a response, and returns the audio response.
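Because the three steps are simply chained, the wiring can be checked without spending any API calls by swapping in stand-in functions. The sketch below illustrates that idea under stated assumptions: make_pipeline and the fake_* functions are hypothetical names introduced only for this example and are not part of Gradio, OpenAI, or ElevenLabs.

```python
def make_pipeline(transcribe, respond, synthesize):
    """Chain three callables into one audio-in, audio-out function."""
    def pipeline(audio):
        text = transcribe(audio)
        reply = respond(text)
        return synthesize(reply)
    return pipeline


# Stand-ins for the real API-backed functions (hypothetical, for testing only)
def fake_transcribe(audio):
    return "hello"


def fake_respond(text):
    return text.upper()


def fake_synthesize(text):
    return text.encode("utf-8")


if __name__ == "__main__":
    pipeline = make_pipeline(fake_transcribe, fake_respond, fake_synthesize)
    print(pipeline("recording.wav"))  # prints b'HELLO'
```

Once the stubbed chain behaves as expected, the real transcription, response, and speech functions can be passed in instead.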

import tempfile
import gradio as gr

def process_voice_input(audio):
    transcription = transcribe_audio(audio)
    response_text = generate_response(transcription)
    audio_bytes = text_to_speech(response_text)
    # Gradio's audio output cannot take raw bytes, so write them to a temp file
    with tempfile.NamedTemporaryFile(suffix=".mp3", delete=False) as f:
        f.write(audio_bytes)
    return f.name

# Define input and output types for Gradio (3.x API)
# type="filepath" passes the recording to our function as a path string
inputs = gr.inputs.Audio(source="microphone", type="filepath")
outputs = gr.outputs.Audio(type="auto")

# Create the Gradio interface
interface = gr.Interface(fn=process_voice_input, inputs=inputs, outputs=outputs, title="Voice Recognition Tool")

Write the main script

Create a script called voice_recognition_tool.py with the following code:

# Written against openai<1.0 and Gradio 3.x
import tempfile

import gradio as gr
import openai
import requests

import config  # config.py defines OPENAI_API_KEY and ELEVENLABS_API_KEY

openai.api_key = config.OPENAI_API_KEY

# Placeholder: replace with a voice ID from your ElevenLabs account
VOICE_ID = "your_voice_id"

# Function to transcribe audio to text using OpenAI
def transcribe_audio(audio):
    # audio is the path to the recording saved by Gradio
    with open(audio, "rb") as audio_file:
        transcript = openai.Audio.transcribe("whisper-1", audio_file)
    return transcript["text"]

# Function to generate a response using OpenAI GPT-3.5 with a persona
def generate_response(prompt):
    messages = [
        {"role": "system", "content": "You are an engineer. Respond to all input in 40 words or less."},
        {"role": "user", "content": prompt},
    ]
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=messages,
        max_tokens=150,
        n=1,
        stop=None,
        temperature=0.5,
    )
    return response.choices[0].message["content"].strip()

# Function to convert text to speech using ElevenLabs
def text_to_speech(text):
    # ElevenLabs expects the key in an xi-api-key header and the voice ID in the URL
    headers = {
        "xi-api-key": config.ELEVENLABS_API_KEY,
        "Content-Type": "application/json",
    }
    data = {"text": text}
    url = f"https://api.elevenlabs.io/v1/text-to-speech/{VOICE_ID}"
    response = requests.post(url, json=data, headers=headers)
    response.raise_for_status()  # surface a bad key or voice ID immediately
    return response.content  # raw audio bytes (MP3 by default)

# Main function to process voice input and generate a vocal response
def process_voice_input(audio):
    transcription = transcribe_audio(audio)
    response_text = generate_response(transcription)
    audio_bytes = text_to_speech(response_text)
    # Gradio's audio output cannot take raw bytes, so write them to a temp file
    with tempfile.NamedTemporaryFile(suffix=".mp3", delete=False) as f:
        f.write(audio_bytes)
    return f.name

# Create and launch the Gradio interface
inputs = gr.inputs.Audio(source="microphone", type="filepath")
outputs = gr.outputs.Audio(type="auto")
interface = gr.Interface(fn=process_voice_input, inputs=inputs, outputs=outputs, title="Voice Recognition Tool")
interface.launch()

Run the script

Finally, execute the script by running the following command in your terminal:

python voice_recognition_tool.py

This starts a local Gradio server and prints its URL in the terminal; open that URL in your web browser. You can now interact with the voice recognition tool: record your voice input and receive a vocal response generated by OpenAI GPT-3.5 and synthesized by ElevenLabs.

In this article, we’ve walked through the process of developing a voice recognition tool using OpenAI, Gradio, and ElevenLabs. By following the steps and integrating the provided code snippets, you can now create a powerful voice recognition tool that can transcribe user input, generate meaningful responses